Commits on Source 6

All 6 commits authored by Arjo Segers.

.gitignore (new file, mode 0 → 100644)

bin/dhusget.sh (deleted, mode 100755 → 0)

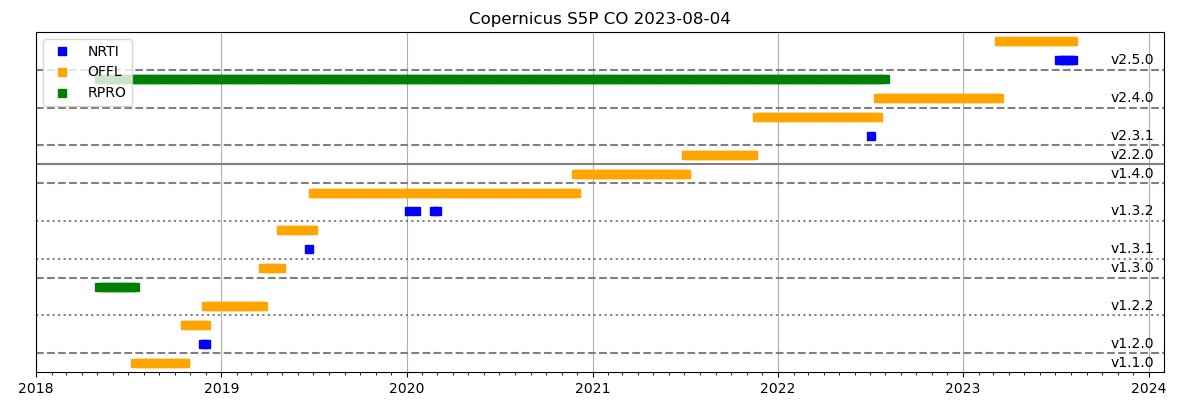

14.2 KiB

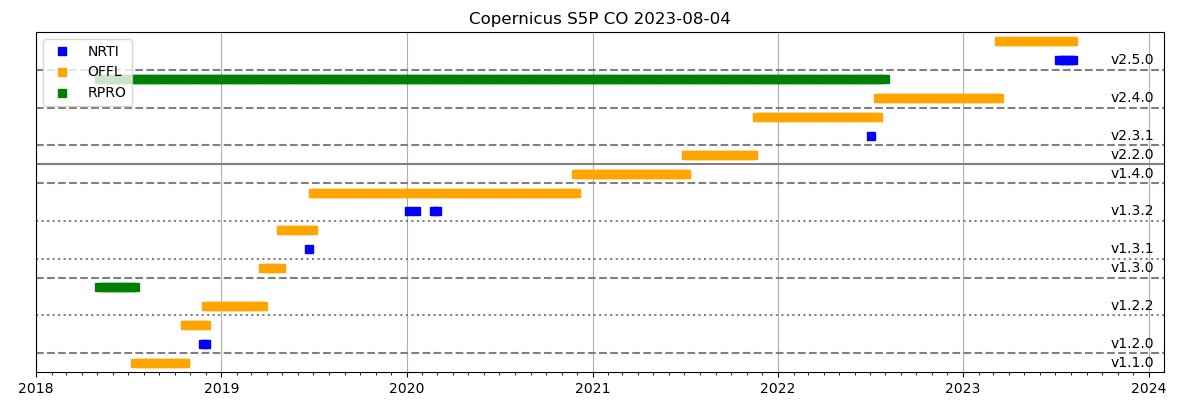

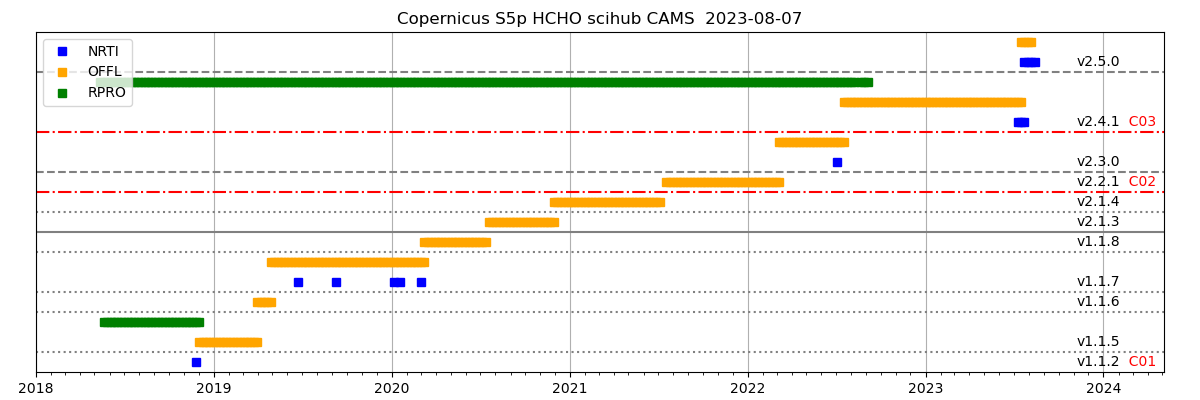

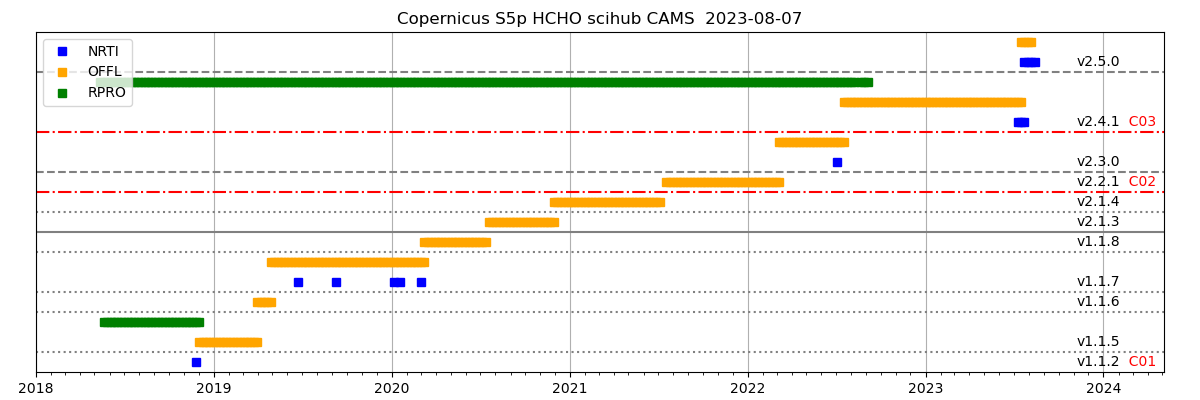
